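kubeadm-ubuntu host planning

| Host IP | Hostname | Configuration |
| --- | --- | --- |
| 172.16.10.81 | k8s-master01 | Ubuntu 20.04.1 LTS, 2 CPU cores, 8 GB RAM, 40 GB system disk |
| 172.16.10.82 | k8s-master02 | Ubuntu 20.04.1 LTS, 2 CPU cores, 8 GB RAM, 40 GB system disk |
| 172.16.10.83 | k8s-master03 | Ubuntu 20.04.1 LTS, 2 CPU cores, 8 GB RAM, 40 GB system disk |
| 172.16.10.84 | k8s-node01 | Ubuntu 20.04.1 LTS, 2 CPU cores, 8 GB RAM, 40 GB system disk |

The commands in the rest of this guide address these hosts as 192.168.10.81 to 192.168.10.84 and use 192.168.10.88 as the virtual IP (VIP); substitute the addresses of your own environment.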

Install the base packages (run on every node)

    # Update the apt package index
    apt-get update

    # Install the packages that allow apt to use repositories over HTTPS
    apt-get -y install \
        apt-transport-https \
        ca-certificates \
        curl \
        gnupg-agent \
        software-properties-common \
        ntpdate

Environment setup (all nodes)

Install Docker (all nodes)

    # Add Docker's official GPG key
    curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

    # Search for the last 8 characters of the fingerprint to verify that the key is now installed
    sudo apt-key fingerprint 0EBFCD88

    # Install the add-apt-repository tool
    apt-get -y install software-properties-common

    # Add the stable repository
    add-apt-repository \
        "deb [arch=amd64] https://download.docker.com/linux/ubuntu \
        $(lsb_release -cs) \
        stable"

    # Update the apt package index
    apt-get update

    # List the available Docker versions
    apt-cache madison docker-ce

    # Install Docker
    apt-get -y install docker-ce=5:19.03.12~3-0~ubuntu-focal docker-ce-cli=5:19.03.12~3-0~ubuntu-focal containerd.io

    # Show Docker information
    docker info

    # Fix "WARNING: No swap limit support" (Docker cannot enforce memory limits on this OS).
    # The warning does not occur on RPM-based systems, which enable these features by default.
    # Add or edit the GRUB_CMDLINE_LINUX line so that it contains the two key-value pairs
    # "cgroup_enable=memory swapaccount=1". Final result:
    #   GRUB_CMDLINE_LINUX="cgroup_enable=memory swapaccount=1 net.ifnames=0 vga=792 console=tty0 console=ttyS0,115200n8 noibrs"
    vi /etc/default/grub

    # Update GRUB and reboot the machine
    update-grub
    reboot

    # Edit the Docker configuration file and configure a registry mirror
    cat > /etc/docker/daemon.json << EOF
    {
      "oom-score-adjust": -1000,
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "100m",
        "max-file": "3"
      },
      "max-concurrent-downloads": 10,
      "insecure-registries": ["0.0.0.0/0"],
      "max-concurrent-uploads": 10,
      "registry-mirrors": ["https://dockerhub.azk8s.cn"],
      "storage-driver": "overlay2",
      "storage-opts": [
        "overlay2.override_kernel_check=true"
      ]
    }
    EOF

    systemctl daemon-reload && systemctl restart docker && systemctl enable docker
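
After the reboot it can be useful to confirm that the two kernel parameters really took effect before relying on Docker memory limits. The following is a minimal sketch and not part of the original procedure: `has_swap_accounting` is a hypothetical helper name, and it assumes the kernel command line is readable from /proc/cmdline (the optional argument only exists so the check can be pointed at another file).

```shell
# Hypothetical helper: succeeds if the given kernel command line file
# (default: /proc/cmdline) contains both parameters added to GRUB above.
has_swap_accounting() {
    cmdline_file="${1:-/proc/cmdline}"
    grep -qw 'cgroup_enable=memory' "$cmdline_file" &&
        grep -qw 'swapaccount=1' "$cmdline_file"
}

# Example: report the result for the running kernel.
if has_swap_accounting; then
    echo "swap accounting is enabled"
else
    echo "swap accounting is NOT enabled; check /etc/default/grub and reboot"
fi
```

If the check fails, `docker info` will most likely still print the swap limit warning.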

Installing the Kubernetes 1.17.3 high-availability cluster

Install kubeadm, kubelet, and kubectl (all nodes)

    # Download the GPG key
    curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

    # Add the Kubernetes apt mirror
    cat <<EOF >/etc/apt/sources.list.d/kubernetes.list
    deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
    EOF

    # Update the apt package index
    sudo apt-get update

    # List the available kubeadm versions
    apt-cache madison kubeadm

    # Install the matching kubeadm, kubelet, and kubectl versions
    sudo apt-get install -y kubelet=1.17.3-00 kubeadm=1.17.3-00 kubectl=1.17.3-00

    # Check the installed kubeadm version
    kubeadm version

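kubeadm command reference

    kubeadm config upload from-file: uploads a configuration file to the cluster as a ConfigMap.
    kubeadm config upload from-flags: generates the ConfigMap from command-line flags.
    kubeadm config view: shows the configuration values currently stored in the cluster.
    kubeadm config print init-defaults: prints the default configuration used by kubeadm init.
    kubeadm config print join-defaults: prints the default configuration used by kubeadm join.
    kubeadm config migrate: converts a configuration file between old and new API versions.
    kubeadm config images list: lists the required images.
    kubeadm config images pull: pulls the required images to the local host.
    kubeadm reset: reverts the changes that kubeadm init or kubeadm join made to the node.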

Building the haproxy + keepalived high-availability layer

Install keepalived (master nodes)

    -> k8s-master01
    sudo apt-get install keepalived -y

    # Edit the keepalived configuration file
    vi /etc/keepalived/keepalived.conf

    global_defs {
        router_id LVS_DEVEL
    }

    vrrp_instance VI_1 {
        state BACKUP
        nopreempt
        interface eth0
        virtual_router_id 80
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass just0kk
        }
        virtual_ipaddress {
            192.168.10.88    # VIP address
        }
    }

    systemctl start keepalived && systemctl enable keepalived

    -> k8s-master02
    sudo apt-get install keepalived -y

    # Edit the keepalived configuration file
    vi /etc/keepalived/keepalived.conf

    global_defs {
        router_id LVS_DEVEL
    }

    vrrp_instance VI_1 {
        state BACKUP
        nopreempt
        interface eth0
        virtual_router_id 80
        priority 50
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass just0kk
        }
        virtual_ipaddress {
            192.168.10.88    # VIP address
        }
    }

    systemctl start keepalived && systemctl enable keepalived

    -> k8s-master03
    sudo apt-get install keepalived -y

    # Edit the keepalived configuration file
    vi /etc/keepalived/keepalived.conf

    global_defs {
        router_id LVS_DEVEL
    }

    vrrp_instance VI_1 {
        state BACKUP
        nopreempt
        interface eth0
        virtual_router_id 80
        priority 30
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass just0kk
        }
        virtual_ipaddress {
            192.168.10.88    # VIP address
        }
    }

    systemctl start keepalived && systemctl enable keepalived

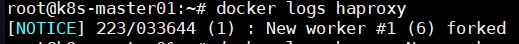
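
The three keepalived configurations above differ only in their `priority` value (100, 50, 30). As a minimal sketch, assuming you prefer to generate the file rather than edit it by hand on each master, the hypothetical helper `render_keepalived_conf` below prints the same configuration for a given priority and, optionally, a network interface other than eth0.

```shell
# Hypothetical helper: print the keepalived configuration used above,
# parameterized by priority (required) and interface (default: eth0).
render_keepalived_conf() {
    priority="$1"
    interface="${2:-eth0}"
    cat <<EOF
global_defs {
    router_id LVS_DEVEL
}

vrrp_instance VI_1 {
    state BACKUP
    nopreempt
    interface ${interface}
    virtual_router_id 80
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass just0kk
    }
    virtual_ipaddress {
        192.168.10.88
    }
}
EOF
}

# Example: preview the configuration for k8s-master02 (priority 50).
render_keepalived_conf 50
```

On a master node the output could be redirected to /etc/keepalived/keepalived.conf before starting the service.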

Install haproxy (HAProxy nodes)

    # Write the haproxy configuration file
    cat > /root/haproxy.cfg <<EOF
    global
        log 127.0.0.1 local0
        log 127.0.0.1 local1 notice
        maxconn 4096
        daemon

    defaults
        log global
        mode http
        option httplog
        option dontlognull
        retries 3
        option redispatch
        timeout connect 5000
        timeout client 50000
        timeout server 50000

    frontend stats-front
        bind *:8081
        mode http
        default_backend stats-back

    frontend fe_k8s_6444
        bind *:6444
        mode tcp
        timeout client 1h
        log global
        option tcplog
        default_backend be_k8s_6443
        acl is_websocket hdr(Upgrade) -i WebSocket
        acl is_websocket hdr_beg(Host) -i ws

    backend stats-back
        mode http
        balance roundrobin
        stats uri /haproxy/stats
        stats auth pxcstats:secret

    backend be_k8s_6443
        mode tcp
        timeout queue 1h
        timeout server 1h
        timeout connect 1h
        log global
        balance roundrobin
        server k8s-master01 192.168.10.81:6443
        server k8s-master02 192.168.10.82:6443
        server k8s-master03 192.168.10.83:6443
    EOF

    # Start haproxy with Docker
    docker run --name haproxy -v /root/haproxy.cfg:/usr/local/etc/haproxy/haproxy.cfg:ro --restart=always --net=host -d haproxy

    # Verify
    docker logs haproxy
    # Expected output: New worker #1 forked
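
The `be_k8s_6443` backend above lists the three API servers without health checks, so HAProxy always treats every master as available. One possible refinement, shown as a sketch rather than as part of the original setup, is to add HAProxy's `check` keyword to each `server` line. The helper name `render_backend_servers` is hypothetical; it only prints the lines so they can be reviewed before being placed in haproxy.cfg.

```shell
# Hypothetical helper: print one "server" line per "name=ip" argument,
# with HAProxy's "check" keyword so that unreachable API servers are
# taken out of rotation. Port 6443 matches the backend above.
render_backend_servers() {
    for pair in "$@"; do
        name="${pair%%=*}"
        ip="${pair#*=}"
        printf '    server %s %s:6443 check\n' "$name" "$ip"
    done
}

render_backend_servers \
    k8s-master01=192.168.10.81 \
    k8s-master02=192.168.10.82 \
    k8s-master03=192.168.10.83
```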

Initialize the Kubernetes cluster on master1

    cat > kubeadm-config.yaml <<EOF
    apiVersion: kubeadm.k8s.io/v1beta2
    kind: ClusterConfiguration
    kubernetesVersion: v1.17.3
    controlPlaneEndpoint: "192.168.10.88:6444"
    apiServer:
      certSANs:
      - 192.168.10.81
      - 192.168.10.82
      - 192.168.10.83
      - 192.168.10.84
      - 192.168.10.88
    networking:
      podSubnet: 10.244.0.0/16
    imageRepository: "registry.cn-hangzhou.aliyuncs.com/google_containers"
    ---
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    mode: ipvs
    EOF

    # Initialize the cluster
    kubeadm init --config kubeadm-config.yaml

Output like the following indicates that initialization succeeded:

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    Then you can join any number of worker nodes by running the following on each as root:

    kubeadm join 192.168.10.88:6444 --token 34lypv.r9czddehwscnwrgg \
        --discovery-token-ca-cert-hash sha256:44adbf1427b9a034ac1eac131bd7a3a4c868439fe067b158bad68b9336c24607 \
        --control-plane

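Note: keep a copy of the kubeadm join … command. It is used below to add master2 and master3 to the cluster, and again, without --control-plane, to add node1.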
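
If the join command is lost, or its token has expired (kubeadm bootstrap tokens expire after 24 hours by default), `kubeadm token create --print-join-command` prints a fresh worker join command on an existing master. The sketch below shows one way to derive the control-plane variant used in this guide from it; `to_control_plane_join` is a hypothetical helper name, and the sample value is simply the command from the output above.

```shell
# Hypothetical helper: turn a worker join command into a control-plane
# join command by appending the --control-plane flag used in this guide.
to_control_plane_join() {
    printf '%s --control-plane\n' "$1"
}

# On an existing master, a fresh worker join command could be captured with:
#   worker_join=$(kubeadm token create --print-join-command)
# Here the command from the kubeadm init output serves as sample input.
worker_join='kubeadm join 192.168.10.88:6444 --token 34lypv.r9czddehwscnwrgg --discovery-token-ca-cert-hash sha256:44adbf1427b9a034ac1eac131bd7a3a4c868439fe067b158bad68b9336c24607'

to_control_plane_join "$worker_join"
```

As in the rest of this guide, a control-plane join of this form only works after the certificates have been copied to the new master.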

Run the following on master1 so that kubectl has permission to manage cluster resources

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

Running kubectl get nodes on master1 shows STATUS NotReady, because no network plugin has been installed yet:

    NAME      STATUS     ROLES    AGE     VERSION
    master1   NotReady   master   2m13s   v1.17.3

Install the Calico network plugin on master1

    kubectl apply -f https://docs.projectcalico.org/v3.8/manifests/calico.yaml

After Calico is installed, run kubectl get nodes on master1 again:

    NAME      STATUS   ROLES    AGE     VERSION
    master1   Ready    master   2m13s   v1.17.3

Copy the certificates from master1 to master2 and master3

    # On master2 and master3, create the required directories
    cd /root && mkdir -p /etc/kubernetes/pki/etcd && mkdir -p ~/.kube/

    # On master1, copy the certificates to master2 and master3
    scp /etc/kubernetes/pki/ca.crt k8s-master02:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/ca.key k8s-master02:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/sa.key k8s-master02:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/sa.pub k8s-master02:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-master02:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/front-proxy-ca.key k8s-master02:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/etcd/ca.crt k8s-master02:/etc/kubernetes/pki/etcd/
    scp /etc/kubernetes/pki/etcd/ca.key k8s-master02:/etc/kubernetes/pki/etcd/

    scp /etc/kubernetes/pki/ca.crt k8s-master03:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/ca.key k8s-master03:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/sa.key k8s-master03:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/sa.pub k8s-master03:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-master03:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/front-proxy-ca.key k8s-master03:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/etcd/ca.crt k8s-master03:/etc/kubernetes/pki/etcd/
    scp /etc/kubernetes/pki/etcd/ca.key k8s-master03:/etc/kubernetes/pki/etcd/

    # After the certificates are copied, run the following on master2 and master3 to join the cluster
    kubeadm join 192.168.10.88:6444 --token 34lypv.r9czddehwscnwrgg \
        --discovery-token-ca-cert-hash sha256:44adbf1427b9a034ac1eac131bd7a3a4c868439fe067b158bad68b9336c24607 \
        --control-plane
    # --control-plane: indicates that the node joins the cluster as a master

    # On master2 and master3:
    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

    # kubectl get nodes now shows:
    NAME      STATUS   ROLES    AGE     VERSION
    master1   Ready    master   39m     v1.17.3
    master2   Ready    master   5m9s    v1.17.3
    master3   Ready    master   2m33s   v1.17.3
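
The sixteen scp commands above copy the same eight files to each of the two additional masters. As a minimal sketch, the hypothetical helper `cert_copy_commands` below generates that list for one target host. It only prints the commands (a dry run), so the list can be reviewed first.

```shell
# Hypothetical helper: print the scp commands that copy the shared CA and
# service-account files from this node to the given master. Printing them
# (a dry run) lets you review the list; pipe the output to "sh" to execute.
cert_copy_commands() {
    target="$1"
    for f in ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key \
             etcd/ca.crt etcd/ca.key; do
        case "$f" in
            etcd/*) dest_dir=/etc/kubernetes/pki/etcd/ ;;
            *)      dest_dir=/etc/kubernetes/pki/ ;;
        esac
        printf 'scp /etc/kubernetes/pki/%s %s:%s\n' "$f" "$target" "$dest_dir"
    done
}

for host in k8s-master02 k8s-master03; do
    cert_copy_commands "$host"
done
```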

Join node1 to the cluster (run on the worker node)

    kubeadm join 192.168.10.88:6444 --token 34lypv.r9czddehwscnwrgg \
        --discovery-token-ca-cert-hash sha256:44adbf1427b9a034ac1eac131bd7a3a4c868439fe067b158bad68b9336c24607

Check the cluster status

    # Check that the components are healthy
    kubectl get componentstatuses

    # Show cluster information
    kubectl cluster-info

    # Check that the core components are in the Running state
    kubectl -n kube-system get pod
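
Node readiness can be checked in the same pass. The following sketch filters the output of `kubectl get nodes --no-headers` for nodes whose STATUS is anything other than `Ready`; `not_ready_nodes` is a hypothetical helper name, and the sample input is only modelled on the node listings shown earlier.

```shell
# Hypothetical helper: read "kubectl get nodes --no-headers" output on
# stdin and print the names of nodes whose STATUS column is not "Ready".
# Combined states such as "Ready,SchedulingDisabled" are reported as well.
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# On a master this would be used as:
#   kubectl get nodes --no-headers | not_ready_nodes
# Sample input, for illustration only:
printf '%s\n' \
    'master1 Ready master 39m v1.17.3' \
    'master2 NotReady master 5m9s v1.17.3' | not_ready_nodes
```

An empty result means every node reports `Ready`.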

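Certificate replacement

Check certificate validity periods

    # Check the validity period of the CA certificate
    openssl x509 -in /etc/kubernetes/pki/ca.crt -noout -text | grep Not
    # The CA certificate is valid for 10 years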

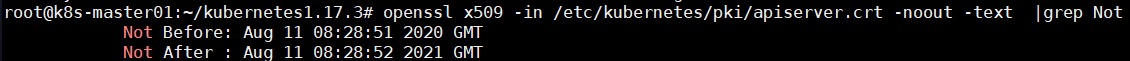

    # Check the validity period of the apiserver certificate
    openssl x509 -in /etc/kubernetes/pki/apiserver.crt -noout -text | grep Not
    # The apiserver certificate is valid for 1 year

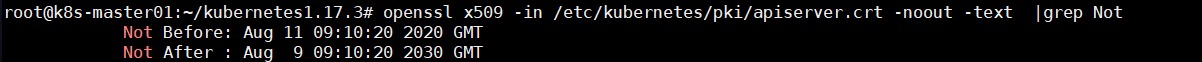
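
Because the apiserver certificate expires after one year, it can help to turn the expiry date into a number of remaining days. The sketch below is an illustration under stated assumptions: `days_until` is a hypothetical helper, it relies on GNU `date -d` (the default on Ubuntu), and the date string must be in the format that `openssl x509 -enddate` prints after `notAfter=`.

```shell
# Hypothetical helper: given the date part of an openssl "notAfter=" line and
# an optional "now" in epoch seconds, print the whole days left until expiry.
# Assumes GNU date (the default on Ubuntu) for "date -d".
days_until() {
    expiry_epoch=$(date -u -d "$1" +%s) || return 1
    now_epoch="${2:-$(date -u +%s)}"
    echo $(( (expiry_epoch - now_epoch) / 86400 ))
}

# On a master the expiry date could be obtained with:
#   openssl x509 -in /etc/kubernetes/pki/apiserver.crt -noout -enddate | cut -d= -f2
# Sample value in the same format, evaluated against the current time:
days_until 'Jan  1 00:00:00 2031 GMT'
```

A negative result means the certificate has already expired.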

Extend the certificate expiry

    # Get update-kubeadm-cert.sh onto master1, master2, and master3
    git clone https://github.com/judddd/kubernetes1.17.3.git
    chmod +x update-kubeadm-cert.sh

    # Extend the certificates of all components to 10 years
    ./update-kubeadm-cert.sh all

    # Verify
    openssl x509 -in /etc/kubernetes/pki/apiserver.crt -noout -text | grep Not

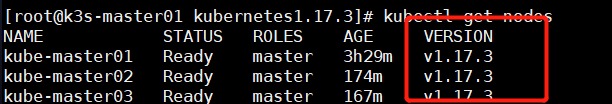

Upgrading the Kubernetes cluster

Check the current version

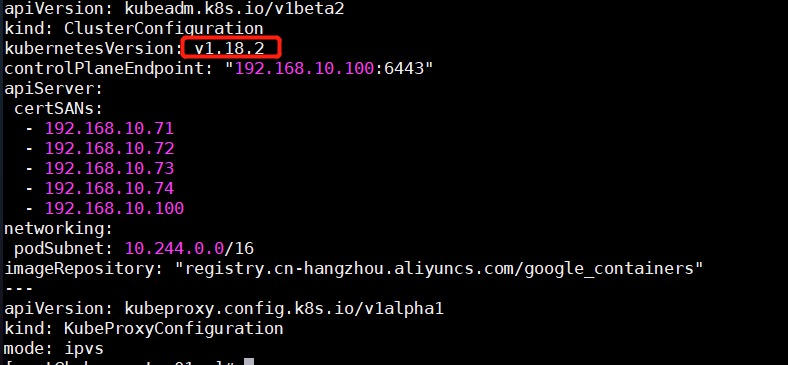

On the master node, edit kubeadm-config-upgrade.yaml

    ssh k8s-master01
    cp kubeadm-config.yaml kubeadm-config-upgrade.yaml
    vi kubeadm-config-upgrade.yaml
    # Change the Kubernetes version to 1.18.2

    scp kubeadm-config-upgrade.yaml root@k8s-master02:/root/
    scp kubeadm-config-upgrade.yaml root@k8s-master03:/root/

Upgrade kubeadm, kubelet, and kubectl on all nodes

    sudo apt-get install -y kubelet=1.18.2-00 kubeadm=1.18.2-00 kubectl=1.18.2-00

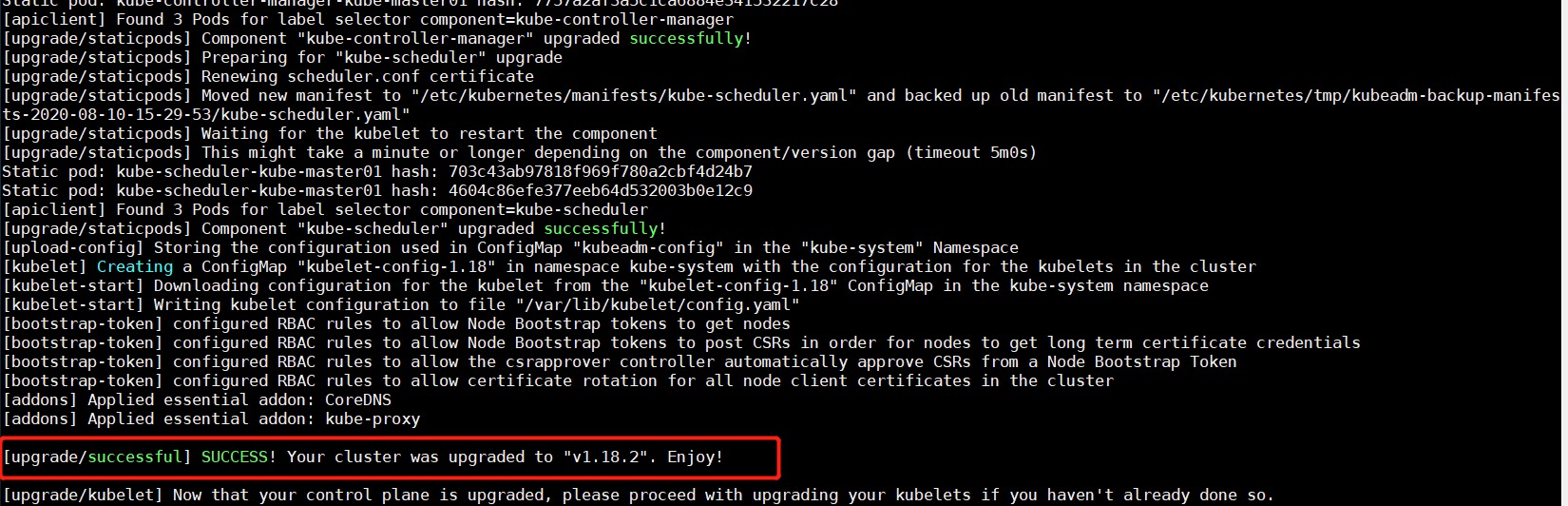
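
kubeadm supports upgrading within a minor version or to the next minor version only, which is why this guide moves from 1.17.3 to 1.18.2 and not directly to a later release. As a minimal sketch, the hypothetical helpers below check that rule for two version strings before any package is installed. They assume both versions share the same major version.

```shell
# Hypothetical helper: print the minor number of a version such as
# "1.17.3", "v1.18.2" or the apt form "1.18.2-00".
minor_of() {
    echo "$1" | sed 's/^v//' | cut -d. -f2
}

# Hypothetical helper: succeed only if the target is the same minor
# version as the current one or exactly one minor version newer.
is_supported_upgrade() {
    gap=$(( $(minor_of "$2") - $(minor_of "$1") ))
    [ "$gap" -eq 0 ] || [ "$gap" -eq 1 ]
}

if is_supported_upgrade v1.17.3 1.18.2-00; then
    echo "1.17.3 -> 1.18.2 is a supported upgrade step"
fi
```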

Upgrade the Kubernetes cluster on the master nodes

    # Run on all three master nodes
    kubeadm upgrade apply --config=kubeadm-config-upgrade.yaml

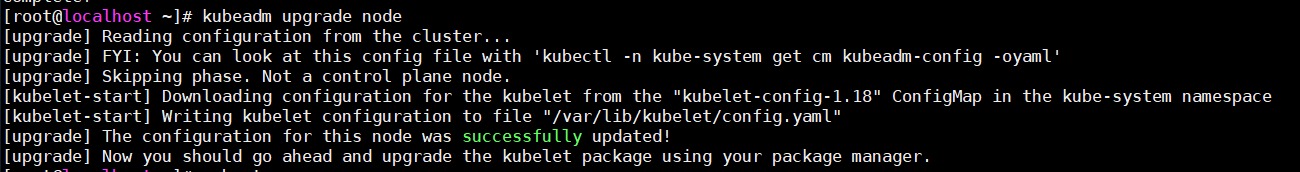

Upgrade the worker node

    # Run on the worker node
    kubeadm upgrade node

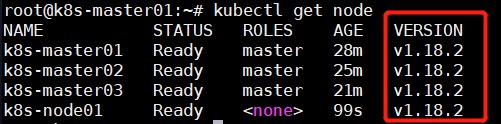

After all nodes have been upgraded, reboot any node that still does not report the new version.

The upgrade succeeded:

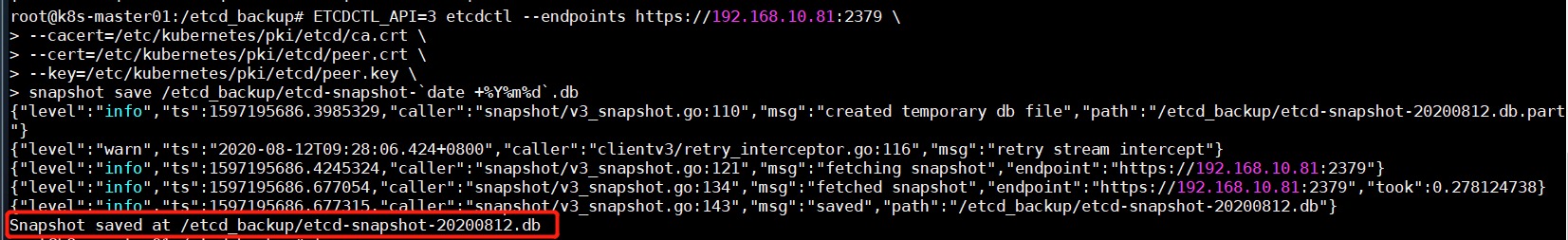

etcd data backup and restore

Manual backup

    # Copy the etcdctl binary from the etcd container to /usr/bin/ on the host
    docker cp k8s_etcd_etcd-master01_kube-system_32144e70958a19d4b529ed946b3e2726_1:/usr/local/bin/etcdctl /usr/bin

    # Create the backup directory
    mkdir /etcd_backup/

    # Take the backup
    ETCDCTL_API=3 etcdctl --endpoints https://192.168.10.81:2379 \
        --cacert=/etc/kubernetes/pki/etcd/ca.crt \
        --cert=/etc/kubernetes/pki/etcd/peer.crt \
        --key=/etc/kubernetes/pki/etcd/peer.key \
        snapshot save /etcd_backup/etcd-snapshot-`date +%Y%m%d`.db

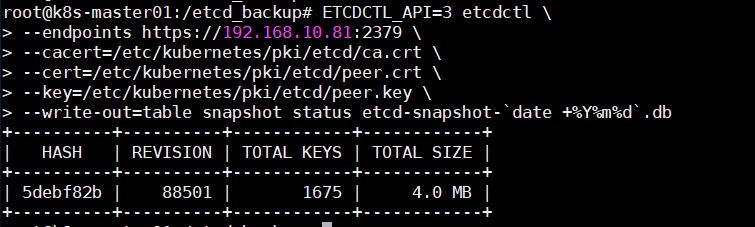
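
Because the snapshot file name contains the date, a backup that runs every day leaves one file per day in /etcd_backup. The following is a minimal sketch of a retention step, which is not part of the original procedure: `prune_snapshots` is a hypothetical helper that keeps only the newest snapshots, relying on the `etcd-snapshot-YYYYMMDD.db` naming used above.

```shell
# Hypothetical helper: keep only the newest $2 snapshots in directory $1.
# Relies on the etcd-snapshot-YYYYMMDD.db naming used above, which sorts
# chronologically when sorted as text.
prune_snapshots() {
    dir="$1"
    keep="$2"
    ls "$dir"/etcd-snapshot-*.db 2>/dev/null | sort -r |
        awk -v keep="$keep" 'NR > keep' |
        while IFS= read -r old; do
            rm -f -- "$old"
        done
}

# Example: keep the seven most recent snapshots in the backup directory.
prune_snapshots /etcd_backup 7
```

The retention count of seven is an arbitrary example value.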

Check the status of the backup

    ETCDCTL_API=3 etcdctl \
        --endpoints https://192.168.10.81:2379 \
        --cacert=/etc/kubernetes/pki/etcd/ca.crt \
        --cert=/etc/kubernetes/pki/etcd/peer.crt \
        --key=/etc/kubernetes/pki/etcd/peer.key \
        --write-out=table snapshot status /etcd_backup/etcd-snapshot-`date +%Y%m%d`.db

Copy etcdctl and the backup to the other master nodes

    scp /usr/bin/etcdctl root@k8s-master02:/usr/bin/
    scp /etcd_backup/etcd-snapshot-`date +%Y%m%d`.db root@k8s-master02:/root/

    scp /usr/bin/etcdctl root@k8s-master03:/usr/bin/
    scp /etcd_backup/etcd-snapshot-`date +%Y%m%d`.db root@k8s-master03:/root/

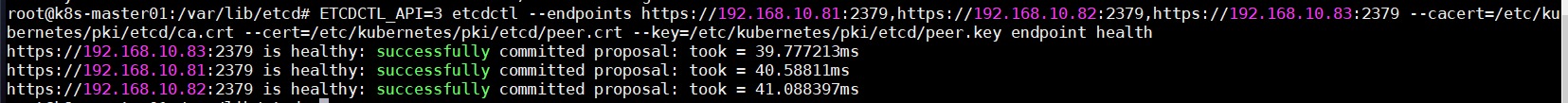

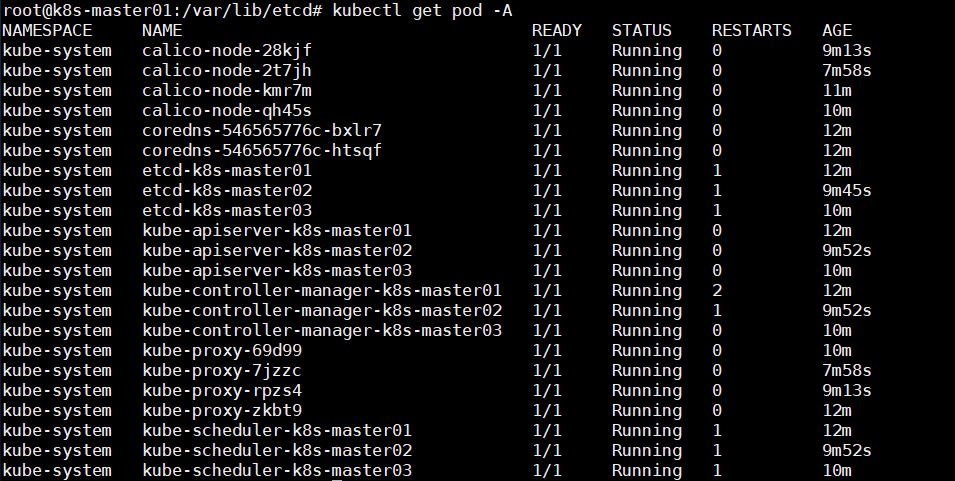

etcd data restore

    # Stop the kubelet service on all three master nodes
    systemctl stop kubelet

    # etcd stores its data in /var/lib/etcd/, which the container mounts from the host's /var/lib/etcd/.
    # Deleting the host's /var/lib/etcd/ directory therefore clears the etcd container's data.
    # Clear the etcd data on all three master nodes
    rm -rf /var/lib/etcd/

    # Restore the etcd data
    -> k8s-master01
    export ETCDCTL_API=3
    etcdctl snapshot restore /etcd_backup/etcd-snapshot-`date +%Y%m%d`.db \
        --cacert=/etc/kubernetes/pki/etcd/ca.crt \
        --cert=/etc/kubernetes/pki/etcd/peer.crt \
        --key=/etc/kubernetes/pki/etcd/peer.key \
        --name=master01 \
        --data-dir=/var/lib/etcd \
        --skip-hash-check \
        --initial-advertise-peer-urls=https://192.168.10.81:2380 \
        --initial-cluster "master01=https://192.168.10.81:2380,master02=https://192.168.10.82:2380,master03=https://192.168.10.83:2380"

    -> k8s-master02
    export ETCDCTL_API=3
    etcdctl snapshot restore /root/etcd-snapshot-`date +%Y%m%d`.db \
        --cacert=/etc/kubernetes/pki/etcd/ca.crt \
        --cert=/etc/kubernetes/pki/etcd/peer.crt \
        --key=/etc/kubernetes/pki/etcd/peer.key \
        --name=master02 \
        --data-dir=/var/lib/etcd \
        --skip-hash-check \
        --initial-advertise-peer-urls=https://192.168.10.82:2380 \
        --initial-cluster "master01=https://192.168.10.81:2380,master02=https://192.168.10.82:2380,master03=https://192.168.10.83:2380"

    -> k8s-master03
    export ETCDCTL_API=3
    etcdctl snapshot restore /root/etcd-snapshot-`date +%Y%m%d`.db \
        --cacert=/etc/kubernetes/pki/etcd/ca.crt \
        --cert=/etc/kubernetes/pki/etcd/peer.crt \
        --key=/etc/kubernetes/pki/etcd/peer.key \
        --name=master03 \
        --data-dir=/var/lib/etcd \
        --skip-hash-check \
        --initial-advertise-peer-urls=https://192.168.10.83:2380 \
        --initial-cluster "master01=https://192.168.10.81:2380,master02=https://192.168.10.82:2380,master03=https://192.168.10.83:2380"

    # Start the kubelet service on all three masters
    systemctl start kubelet

    # Check that etcd is healthy
    ETCDCTL_API=3 etcdctl --endpoints https://192.168.10.81:2379,https://192.168.10.82:2379,https://192.168.10.83:2379 \
        --cacert=/etc/kubernetes/pki/etcd/ca.crt \
        --cert=/etc/kubernetes/pki/etcd/peer.crt \
        --key=/etc/kubernetes/pki/etcd/peer.key \
        endpoint health
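
All three restore commands must receive exactly the same `--initial-cluster` value, and a typo in one of them is easy to miss. As a minimal sketch, the hypothetical helper `initial_cluster` below builds that string once from `name=ip` pairs, using the etcd peer port 2380 as in the commands above.

```shell
# Hypothetical helper: build the --initial-cluster value from
# "name=ip" arguments, using the etcd peer port 2380 as in this guide.
initial_cluster() {
    result=""
    for pair in "$@"; do
        name="${pair%%=*}"
        ip="${pair#*=}"
        result="${result:+$result,}${name}=https://${ip}:2380"
    done
    echo "$result"
}

initial_cluster master01=192.168.10.81 master02=192.168.10.82 master03=192.168.10.83
```

The printed value could be stored in a variable and passed to `--initial-cluster` on each master.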

Kubernetes is running normally.