Istio ServiceEntry

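A ServiceEntry adds an entry to Istio's internal service registry. It is typically used to let workloads in the mesh send requests to services outside the Istio mesh.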


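```bash
# Existing environment
kubectl get gw
# mygw
kubectl get vs
# myvs   ["mygw"]   ["aa.yuan.cc"]
kubectl get dr
# mydr   svc1
kubectl get pods
# pod1
# pod2
kubectl exec -it pod1 -- bash
# inside pod1:
curl https://www.baidu.com   # succeeds: by default, all outbound traffic is allowed to leave the mesh
# A ServiceEntry, together with the REGISTRY_ONLY outbound policy covered below, lets you control which external services are reachable
```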

Creating a ServiceEntry


```bash
mkdir chap4 && cd chap4
cp ../chap3/vs.yaml ../chap3/mygw1.yaml ./
vim se1.yaml
```

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: myse
spec:
  hosts:
  - www.baidu.com
  ports:
  - number: 443
    name: https
    protocol: HTTPS
  resolution: DNS          # the sidecar (Envoy) resolves www.baidu.com via DNS
  location: MESH_EXTERNAL  # the service lives outside the mesh
```

```bash
kubectl apply -f se1.yaml
kubectl exec -it pod1 -- bash
# inside pod1:
curl https://www.baidu.com

# Second version: STATIC resolution with a fixed endpoint
vim se1.yaml
```

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: myse
spec:
  hosts:
  - www.baidu.com
  ports:
  - number: 443
    name: https
    protocol: HTTPS
  resolution: STATIC
  location: MESH_EXTERNAL
  endpoints:
  - address: 192.168.26.3   # traffic for www.baidu.com goes to this address instead of the DNS result
```

```bash
kubectl apply -f se1.yaml
kubectl exec -it pod1 -- bash
# inside pod1:
curl https://www.baidu.com
```
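Both versions above list exact host names. If every subdomain of a site should be reachable, a ServiceEntry can use a wildcard host instead. The sketch below assumes you want to allow HTTPS to all `*.baidu.com` hosts; the resource name `myse-wildcard` is hypothetical. A wildcard is not a single resolvable name, so this sketch uses `resolution: NONE`. With that setting, the sidecar forwards the connection to the IP address the application itself resolved.

```yaml
# Hypothetical example: allow HTTPS to any baidu.com subdomain
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: myse-wildcard
spec:
  hosts:
  - "*.baidu.com"
  ports:
  - number: 443
    name: https
    protocol: HTTPS
  resolution: NONE        # DNS resolution would need a concrete host name
  location: MESH_EXTERNAL
```

Note that `*.baidu.com` does not match the bare domain `baidu.com`. Add that host separately if it is needed.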

Default outbound traffic policy


```bash
# Switch the mesh-wide outbound policy by editing the mesh config
kubectl edit configmap istio -n istio-system -o yaml
```

```yaml
data:
  mesh: |-
    accessLogFile: /dev/stdout
    defaultConfig:
      discoveryAddress: istiod.istio-system.svc:15012
      proxyMetadata: {}
      tracing:
        zipkin:
          address: zipkin.istio-system:9411
    enablePrometheusMerge: true
    outboundTrafficPolicy:    # added: only allow destinations in the service registry
      mode: REGISTRY_ONLY
    rootNamespace: istio-system
```

```bash
# Alternatively, set the policy at install time:
istioctl install --set profile=demo -y --set meshConfig.outboundTrafficPolicy.mode=REGISTRY_ONLY

kubectl exec -it pod1 -- bash
# inside pod1:
curl https://www.jd.com   # blocked: www.jd.com is not in the service registry
curl svc1                 # works: svc1 is a Kubernetes Service, so it is in the registry

# Create a test pod and service
vim /root/podx.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: podx
  name: podx
spec:
  terminationGracePeriodSeconds: 0
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: podx
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}
```

```bash
kubectl apply -f /root/podx.yaml
kubectl expose --name=svcx pod podx --port=80
kubectl exec -it pod1 -- bash
# inside pod1:
curl svcx   # Kubernetes Services are registered automatically, so this is allowed

# Repeat in the default namespace
kubectl apply -f /root/podx.yaml -n default
kubectl expose --name=svcx pod podx --port=80 -n default

# Clean up the default-namespace copies
kubectl get pods -n default
kubectl delete pod podx -n default
kubectl get svc svcx -n default
kubectl delete svc svcx -n default

# Register www.jd.com as well
vim se1.yaml
```

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: myse
spec:
  hosts:
  - www.baidu.com
  - www.jd.com
  ports:
  - number: 443
    name: https
    protocol: HTTPS
  resolution: DNS
  location: MESH_EXTERNAL
```

```bash
kubectl apply -f se1.yaml
kubectl exec -it pod1 -- bash
# inside pod1:
curl https://www.jd.com      # allowed: registered by the ServiceEntry
curl https://www.baidu.com   # allowed: registered by the ServiceEntry
curl https://www.qq.com      # blocked: not registered

# Restore the default policy and remove the ServiceEntry
kubectl edit configmap istio -n istio-system -o yaml
#   outboundTrafficPolicy:
#     mode: ALLOW_ANY
kubectl delete -f se1.yaml
```
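Editing the `istio` ConfigMap by hand works for a quick test. However, a later `istioctl install` regenerates that ConfigMap and can overwrite the change. The sketch below keeps the policy in an IstioOperator file instead, and is equivalent to the `--set meshConfig.outboundTrafficPolicy.mode=REGISTRY_ONLY` flag used above. The file name `registry-only.yaml` is hypothetical.

```yaml
# registry-only.yaml (hypothetical file name)
# Apply with: istioctl install -f registry-only.yaml -y
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  profile: demo
  meshConfig:
    outboundTrafficPolicy:
      mode: REGISTRY_ONLY   # set to ALLOW_ANY to restore the default behaviour
```

Re-running `istioctl install` with other `--set` flags but without this setting would bring the policy back to the default `ALLOW_ANY`. Keeping all install options in one file avoids that.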

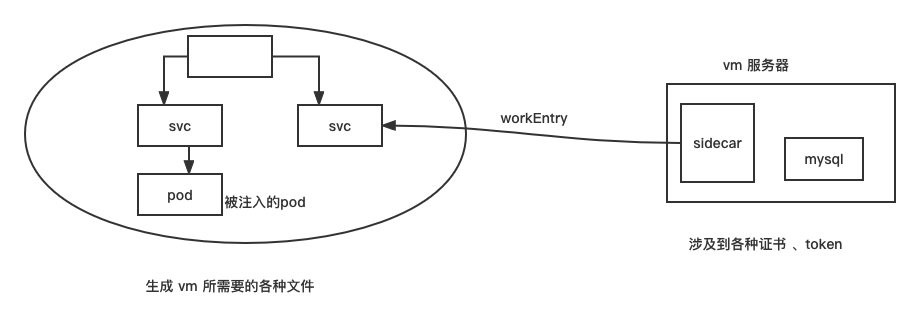

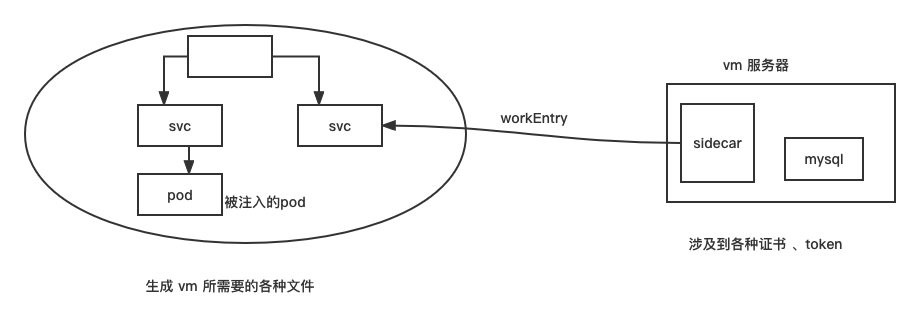

WorkloadEntry

**Goal: bring other hosts, VMs, and servers into the service mesh.**

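Before going further, take a snapshot of the current test environment. Otherwise, the environment will be hard to restore if it gets messed up.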


```bash
istioctl install --set profile=demo --set values.pilot.env.PILOT_ENABLE_WORKLOAD_ENTRY_AUTOREGISTRATION=true
```


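```bash
# Enable WorkloadEntry auto-registration and smart DNS proxying (DNS capture)
istioctl install --set profile=demo --set values.pilot.env.PILOT_ENABLE_WORKLOAD_ENTRY_AUTOREGISTRATION=true --set meshConfig.defaultConfig.proxyMetadata.ISTIO_META_DNS_CAPTURE='\"true\"'
# In this local test environment, enabling smart DNS failed, so enable auto-registration only:
istioctl install --set profile=demo --set values.pilot.env.PILOT_ENABLE_WORKLOAD_ENTRY_AUTOREGISTRATION=true
kubectl get pods -n istio-system -o wide
# istiod pod IP: 10.244.186.142
kubeadm config view
# podSubnet: 10.244.0.0/16
# (newer kubeadm releases no longer have `config view`; there, read it with:
#  kubectl -n kube-system get cm kubeadm-config -o yaml)
```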
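The same two settings can also be written as an IstioOperator file instead of `--set` flags. This avoids the shell quoting that `'\"true\"'` needs. The sketch below assumes that approach; the file name `vm-install.yaml` is hypothetical. Drop the `proxyMetadata` block if DNS capture fails in your environment, as it did here.

```yaml
# vm-install.yaml (hypothetical file name)
# Apply with: istioctl install -f vm-install.yaml -y
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  profile: demo
  meshConfig:
    defaultConfig:
      proxyMetadata:
        ISTIO_META_DNS_CAPTURE: "true"   # smart DNS proxying; quoted because proxyMetadata values are strings
  values:
    pilot:
      env:
        PILOT_ENABLE_WORKLOAD_ENTRY_AUTOREGISTRATION: "true"
```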

Create a new VM for testing


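```bash
# Create a VM with IP 192.168.26.23. At first it cannot ping Kubernetes pod IPs:
ping 10.244.186.142   # fails
# Add a route to the pod network through a cluster node.
# gw is the IP of the node where the gateway runs; put the route in the network config to make it persistent
route add -net 10.244.0.0 gw 192.168.26.82 netmask 255.255.0.0
ping 10.244.186.142   # now reachable

# Download and install the Istio 1.10.3 sidecar package for the VM's OS
# Debian/Ubuntu:
curl -LO https://storage.googleapis.com/istio-release/releases/1.10.3/deb/istio-sidecar.deb
dpkg -i istio-sidecar.deb
# CentOS/RHEL:
curl -LO https://storage.googleapis.com/istio-release/releases/1.10.3/rpm/istio-sidecar.rpm
rpm -ivh istio-sidecar.rpm
```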

Creating a WorkloadGroup


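```bash
cd chap4 && vim mywg.yaml
```

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: WorkloadGroup
metadata:
  name: mywg
  namespace: ns1
spec:
  metadata:
    annotations: {}
    labels:
      app: test
  template:
    ports: {}
    serviceAccount: sa1
```

```bash
kubectl get wg
kubectl apply -f mywg.yaml && kubectl get wg
# The template references service account sa1, so create it in ns1
kubectl get sa
kubens                        # lists namespaces and highlights the current one (kubens comes from the kubectx project)
kubectl create sa sa1 -n ns1
# Generate the files the VM needs into directory 11
mkdir 11
istioctl x workload entry configure -f mywg.yaml -o 11
ls 11
# Copy them to the VM
scp -r 11/ 192.168.26.23:~
```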
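The WorkloadGroup above leaves `ports` empty and defines no health check. The WorkloadGroup API also accepts named ports in the template and a `probe` that the VM's sidecar runs to report readiness. The sketch below assumes the VM serves HTTP on port 80, as in the test later in this section. The port name `http` and the probe path `/` are assumptions. The official VM installation guide for Istio 1.9/1.10 also sets `values.pilot.env.PILOT_ENABLE_WORKLOAD_ENTRY_HEALTHCHECKS=true` alongside auto-registration for health checking, so verify whether your version needs it.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: WorkloadGroup
metadata:
  name: mywg
  namespace: ns1
spec:
  metadata:
    labels:
      app: test
  template:
    ports:
      http: 80            # assumption: the VM serves HTTP on port 80
    serviceAccount: sa1
  probe:                  # readiness probe run by the sidecar on the VM
    initialDelaySeconds: 5
    periodSeconds: 10
    httpGet:
      path: /             # assumption: a path that returns 200
      port: 80
```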

Steps on the VM


```bash
# CentOS 7.6
# Install the root certificate on the VM
mkdir -p /etc/certs
cp 11/root-cert.pem /etc/certs/root-cert.pem
# Install the token
mkdir -p /var/run/secrets/tokens
cp 11/istio-token /var/run/secrets/tokens/istio-token
cp 11/cluster.env /var/lib/istio/envoy/cluster.env
cat /var/lib/istio/envoy/cluster.env   # shows the configuration generated for the cluster
# Install the mesh config to /etc/istio/config/mesh
cp 11/mesh.yaml /etc/istio/config/mesh
mkdir -p /etc/istio/proxy
chown -R istio-proxy /var/lib/istio /etc/certs /etc/istio/proxy /etc/istio/config /var/run/secrets /etc/certs/root-cert.pem   # the istio-proxy user is created when the sidecar package is installed
# Map the istiod hostname in /etc/hosts (get istiod's IP with: kubectl get pods -o wide -n istio-system)
echo "10.244.186.142 istiod.istio-system.svc" >> /etc/hosts
# Check and start the Istio sidecar service
systemctl list-unit-files | grep istio   # disabled
systemctl start istio.service
systemctl enable istio.service           # start on boot
systemctl is-active istio.service        # activating
# Check the logs
tail -f /var/log/istio/istio.log
# The log reports errors, so retry on Ubuntu instead
```


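```bash
# Retry with Ubuntu 18.04, keeping the IP 192.168.26.23, and repeat the steps above
curl -LO https://storage.googleapis.com/istio-release/releases/1.10.3/deb/istio-sidecar.deb
dpkg -i istio-sidecar.deb

# Then copy directory 11 and its contents to the VM (run on the cluster node)
scp -r 11/ 192.168.26.23:~   # a host-key conflict means ~/.ssh/known_hosts still holds the old VM's key; remove that entry
vim ~/.ssh/known_hosts       # delete the 192.168.26.23 line (or run: ssh-keygen -R 192.168.26.23)
scp -r 11/ 192.168.26.23:~

# Install the root certificate on the VM
mkdir -p /etc/certs
cp 11/root-cert.pem /etc/certs/root-cert.pem
# Install the token
mkdir -p /var/run/secrets/tokens
cp 11/istio-token /var/run/secrets/tokens/istio-token
cp 11/cluster.env /var/lib/istio/envoy/cluster.env
cat /var/lib/istio/envoy/cluster.env   # shows the configuration generated for the cluster
# Install the mesh config to /etc/istio/config/mesh
cp 11/mesh.yaml /etc/istio/config/mesh
mkdir -p /etc/istio/proxy
chown -R istio-proxy /var/lib/istio /etc/certs /etc/istio/proxy /etc/istio/config /var/run/secrets /etc/certs/root-cert.pem   # the istio-proxy user is created when the sidecar package is installed
# Map the istiod hostname in /etc/hosts (get istiod's IP with: kubectl get pods -o wide -n istio-system)
echo "10.244.186.142 istiod.istio-system.svc" >> /etc/hosts

# Add the route to the pod network
route add -net 10.244.0.0 gw 192.168.26.82 netmask 255.255.0.0
# Attempts to delete the route (both fail, so the route stays in place)
route delete 10.244.0.0 dev ens32      # SIOCDELRT: No such process
route -n                               # show the routing table
route delete gw 10.244.0.0 dev ens32   # gw: Host name lookup failure
route -n
# A working form for deleting this network route: route del -net 10.244.0.0 netmask 255.255.0.0 gw 192.168.26.82
ping 10.244.186.142   # reachable at this point

# Check and start the istio service
systemctl is-active istio   # inactive: not started yet
systemctl start istio && systemctl enable istio
systemctl is-active istio   # active: running now

# Check the logs
tail -f /var/log/istio/istio.log   # the proxy starts successfully; in this test Ubuntu worked where CentOS did not
```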
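The `route add` command only lasts until the next reboot. Ubuntu 18.04 configures networking with netplan, so the route can be made persistent by adding it to the interface's netplan file. The sketch below assumes the interface is `ens32`, as in the commands above. Merge the `routes` entry into the existing stanza for that interface rather than replacing the file; the file name varies by installation. Apply the change with `netplan apply`.

```yaml
# /etc/netplan/<existing-file>.yaml (placeholder; keep the existing addresses/gateway4/nameservers for ens32)
network:
  version: 2
  ethernets:
    ens32:
      routes:
        - to: 10.244.0.0/16       # Kubernetes pod subnet (podSubnet from kubeadm)
          via: 192.168.26.82      # cluster node used as the gateway
```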


```bash
kubectl get we
mkdir xx && cd xx
vim we.yaml
```

```yaml
apiVersion: networking.istio.io/v1beta1
kind: WorkloadEntry
metadata:
  name: mywe
  namespace: ns1
spec:
  serviceAccount: sa1
  address: 192.168.26.23
  labels:
    app: test
---
apiVersion: v1
kind: Service
metadata:
  name: vm2-svc
  namespace: ns1
  labels:
    app: test
spec:
  ports:
  - port: 80
    name: http-vm
    targetPort: 80
  selector:
    app: test   # Istio also adds WorkloadEntries with matching labels as endpoints
```

```bash
kubectl apply -f we.yaml
kubectl get svc
kubectl delete svc svcx   # remove the earlier test service

# On the VM: serve HTTP on port 80
python3 -m http.server 80
# On the cluster node: call the VM through the Service
kubectl exec -it pod1 -- bash
# inside pod1:
curl vm2-svc

# On the VM: stop the Python server first, then serve a page with nginx instead
apt-get install nginx -y
echo "hello vm vm" > /var/www/html/index.html   # custom page; path assumes Ubuntu's default nginx site
systemctl start nginx
kubectl exec -it pod1 -- bash
# inside pod1:
curl vm2-svc

# Clean up
cd xx
kubectl delete -f we.yaml
cd ../ && kubectl delete -f mywg.yaml
```
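Above, a Kubernetes Service selects the WorkloadEntry by its `app: test` label. An alternative is a ServiceEntry with `location: MESH_INTERNAL` and a `workloadSelector`, which selects WorkloadEntries by label without a Kubernetes Service. In the sketch below, the name `vm2-se` and the host `vm2.ns1.example` are hypothetical. That host name is not in cluster DNS. Pods can resolve it only if DNS proxying (`ISTIO_META_DNS_CAPTURE`) works or the name resolves some other way. This is why the Kubernetes Service used above is the simpler choice in this environment.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: vm2-se              # hypothetical name
  namespace: ns1
spec:
  hosts:
  - vm2.ns1.example         # hypothetical host name for the VM service
  location: MESH_INTERNAL
  ports:
  - number: 80
    name: http
    protocol: HTTP
  resolution: STATIC
  workloadSelector:
    labels:
      app: test             # same label as WorkloadEntry mywe
```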

Communication between external servers and services inside the mesh


```bash
cd chap4
kubectl apply -f mywg.yaml
cd xx && kubectl apply -f we.yaml && cd ..
kubectl get gw
kubectl get vs
cp vs.yaml vs2.yaml
vim vs2.yaml
```

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: myvs2
spec:
  hosts:
  - "bb.yuan.cc"
  gateways:
  - mygw
  http:
  - route:
    - destination:
        host: vm2-svc
```

```bash
kubectl apply -f vs2.yaml
# On the client machine, map bb.yuan.cc to the ingress gateway address (192.168.26.230 here)
vim /etc/hosts
#   192.168.26.230 bb.yuan.cc bb
curl bb.yuan.cc
```
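This step reuses `mygw` from chap3, whose definition is not shown here. For the ingress gateway to accept `bb.yuan.cc`, the Gateway's `hosts` must include that name, or `"*"`. If mygw only lists `aa.yuan.cc`, the VirtualService for bb.yuan.cc will not be attached to it. The sketch below is a hypothetical Gateway that accepts both hosts on port 80. It assumes the default ingress gateway, which carries the `istio: ingressgateway` label.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: mygw
spec:
  selector:
    istio: ingressgateway   # default ingress gateway deployment
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "aa.yuan.cc"
    - "bb.yuan.cc"
```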